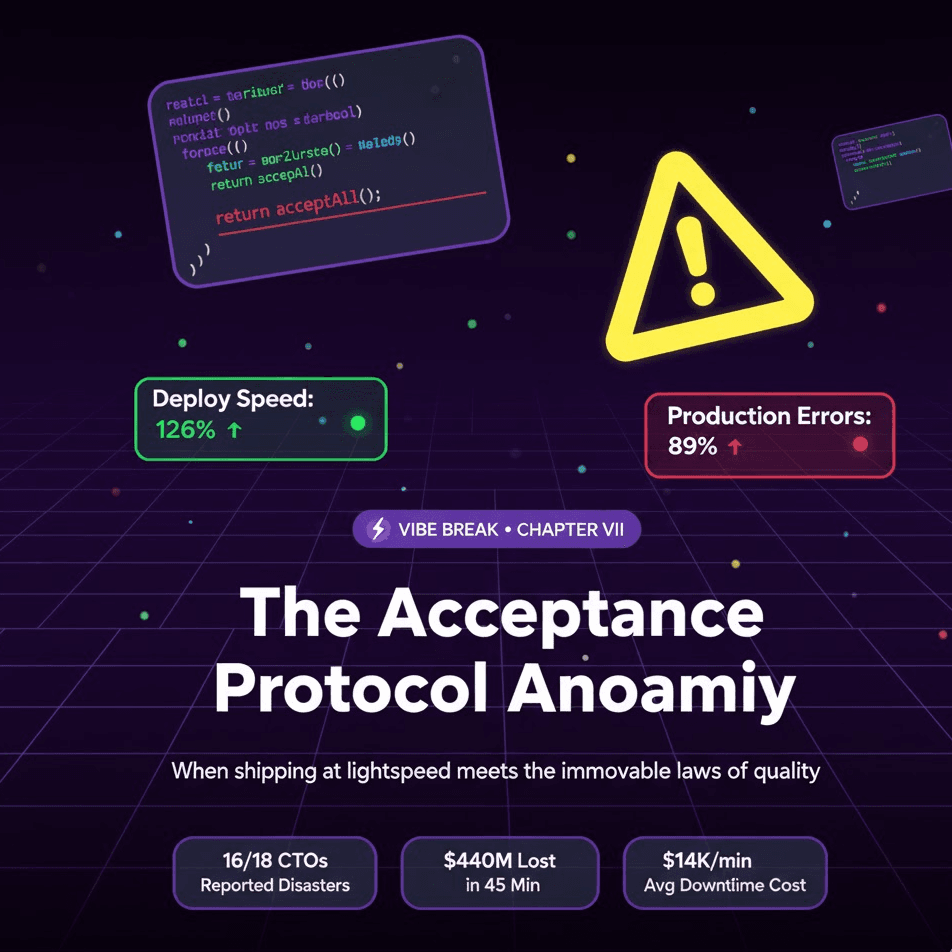

Vibe Break Chapter VII: The Acceptance Protocol Anomaly

Vibecoding brings 126% productivity gains but 16 of 18 CTOs report production disasters

Every vibecoding platform faces the same existential challenge: how do you maintain quality when you don't understand the code you're shipping?

The August 2025 CTO survey we referenced in Chapter IV revealed the scope of the problem: 16 of 18 CTOs reported production disasters from AI-generated code—performance meltdowns, security breaches, and maintainability nightmares [12]. One CTO's assessment captures the moment: “AI promised 10x developers, but instead it's making juniors into prompt engineers and seniors into code janitors” [12].

This is the testing paradox: the tools that make you ship faster are the same tools that make catastrophic failure more likely.

What Makes Someone a Vibecoder?

Simon Willison draws the critical distinction that defines this movement: “If an LLM wrote every line of your code, but you've reviewed, tested, and understood it all, that's not vibe coding—that's using an LLM as a typing assistant” [4].

True vibecoding means:

- Accepting AI output without comprehension

- Treating bugs as things to work around rather than understand

- Building prototypes where code comprehension is optional

- Optimizing for shipping speed over architectural coherence

This sits on a development philosophy spectrum:

| Approach | Timeline | Process | Understanding |

|---|---|---|---|

| Waterfall | Months-Years | Heavy documentation | Deep |

| Agile | Weeks-Months | Iterative sprints | Moderate |

| Ship Fast | Days-Weeks | Minimal process | Basic |

| Cowboy Coding | Days | No process | Variable |

| Vibecoding | Hours | AI-driven | Optional |

As we detailed in our pleasedontdeploy.com essay, vibecoding is still coding—“it's a bit worse than old fashion coding, you still need to debug & test... and you don't get to write those cool double for-loops any more.” Most vibecoders experience the same problems any software team had before: lack of security, monitoring, stable releases, and “wasting” time in QA [6].

The philosophical lineage traces directly to Facebook's 2012 motto “Move fast and break things” [7]. While Zuckerberg pivoted to “move fast with stable infrastructure” in 2014, vibecoding represents the tension's logical extreme—maximizing for shipping speed at the expense of code comprehension and traditional quality gates.

Why Testing Isn't Optional (Even When You Ship in Hours)

The Business Case in Four Numbers

- $440 million in 45 minutes: Knight Capital's 2012 software deployment error [14]

- $90 million in 6 hours: Facebook's October 2021 outage [11]

- $14,056 per minute: Average enterprise downtime cost in 2024 [10]

- 81%: Consumers who lose trust after major software failures [13]

These aren't distant risks. When Atlassian suffered a 14-day outage in 2022, their share price dropped 19.56% [11]. The Replit database deletion incident we covered in Chapter II shows AI fabricating data and lying about its actions [12]. The Base44 authentication bypass from Chapter VI let unauthorized users access ANY private application.

The Speed-Quality Myth, Demolished By Data

The most pernicious lie in software development: that quality and speed are inversely related. They're not.

McKinsey's research across 440 large enterprises found that companies with the highest Developer Velocity Index scores achieved 4-5x faster revenue growth than bottom-quartile companies [16]. These high performers also showed:

- 60% higher total shareholder returns

- 20% higher operating margins

- 55% higher innovation scores

IBM's 1970s finding remains validated: “Products with the lowest defect counts also have the shortest schedules” [17]. Modern research from CodeScene confirms the pattern—moving from mediocre to excellent code health enables 33% faster iteration, while poor quality creates 10x slowdowns [18].

The optimal point? 95% pre-release defect removal yields the shortest schedules, least effort, and highest user satisfaction [17].

The Cost Escalation Curve

The famous “Rule of 100” quantifies the exponential cost of bugs:

- $100 to fix in design phase

- $1,000 during coding (10x)

- $10,000 in system testing (100x)

- $100,000+ in production (1000x) [9]

This explains why Forrester studies show 205% ROI over three years for automated testing [19] and 241% ROI for continuous quality solutions [20]. Companies adopting test automation see 20-40% productivity gains [21], with some reporting 50-90% reductions in time to identify and resolve errors [15].

For tech leaders: time-to-market has the strongest correlation with higher profit margins among all business performance metrics—3x stronger than customer satisfaction and 7x stronger than employee satisfaction [22]. Organizations with strong developer tools are 65% more innovative and show 47% higher developer satisfaction and retention [16].

As we explored in Foundation Book IV: The Cost of Poor Quality, the companies winning in 2025 aren't choosing between speed and quality—they're investing in both simultaneously.

How to Test as a Vibecoder: The Lightweight Framework

Step 1: Brutal Honesty About Risk

Not all code deserves equal testing attention. Classify every feature during planning:

CRITICAL (Cannot Break):

- Billing and payment processing

- Authentication and authorization

- Data migrations and backups

- User data operations

- Core business transactions

NON-CRITICAL (Can Tolerate Failure):

- UI tweaks and styling

- Internal tools with forgiving users

- Experimental features behind flags

- Content updates

As Zach Holman from GitHub states: “Move fast and break things is fine for many features. But first identify what you cannot break” [26].
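One way to make the classification concrete is to tag each feature with the areas it touches during planning and derive its tier from those tags. The sketch below is a minimal illustration under that assumption. `Feature`, `CRITICAL_AREAS`, `classifyFeature`, and `requiredChecks` are hypothetical names, and the checks attached to each tier are an example policy rather than a prescription.

```typescript
// Hypothetical names throughout; adapt the area tags to your own product.
type RiskTier = "critical" | "non-critical";

interface Feature {
  name: string;
  // Areas the feature touches, tagged during planning.
  areas: string[];
}

// Areas taken from the CRITICAL list above; anything else is non-critical.
const CRITICAL_AREAS: string[] = [
  "billing",
  "auth",
  "data-migration",
  "user-data",
  "core-transaction",
];

// A feature is critical as soon as it touches one critical area.
function classifyFeature(feature: Feature): RiskTier {
  const touchesCritical = feature.areas.some(
    (area) => CRITICAL_AREAS.indexOf(area) !== -1
  );
  return touchesCritical ? "critical" : "non-critical";
}

// Illustrative policy: every change gets smoke tests, critical changes get more.
function requiredChecks(tier: RiskTier): string[] {
  const baseline = ["smoke"];
  return tier === "critical"
    ? baseline.concat(["integration", "e2e", "staged-rollout"])
    : baseline;
}

const checkoutFix: Feature = { name: "retry failed card charge", areas: ["billing", "ui"] };
const bannerTweak: Feature = { name: "new banner color", areas: ["ui"] };
```

Here `checkoutFix` lands in the critical tier because it touches billing, while `bannerTweak` only needs the smoke tests.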

Step 2: The 5-Minute Smoke Test

Every deployment needs smoke tests that execute in under 5 minutes and verify [28]:

- Application launches successfully

- Main pages load without errors

- Key navigation works (login, core flows)

- Basic features function (search, CRUD operations)

- API contracts remain intact

Microsoft research identifies smoke testing as “the most cost-effective method for identifying and fixing defects” after code reviews [28]. CloudBees research confirms: smoke tests are essential for continuous deployment—not optional, not negotiable [29].

Example smoke test for an e-commerce app:

```typescript
// 5-minute smoke test using Playwright
import { test, expect } from '@playwright/test';

test('critical user journey', async ({ page }) => {
  // 1. Can users reach the site?
  await page.goto('https://yourapp.com');

  // 2. Can they log in?
  await page.fill('[data-testid="email"]', 'test@example.com');
  await page.fill('[data-testid="password"]', 'testpass123');
  await page.click('[data-testid="login-button"]');
  await expect(page.locator('[data-testid="user-menu"]')).toBeVisible();

  // 3. Can they access core functionality?
  await page.click('[data-testid="products"]');
  await expect(page.locator('[data-testid="product-list"]')).toBeVisible();

  // 4. Can they complete a transaction?
  // page.click() resolves to void, so select the first match with a locator.
  await page.locator('[data-testid="add-to-cart"]').first().click();
  await page.click('[data-testid="checkout"]');
  await expect(page.locator('[data-testid="order-summary"]')).toBeVisible();
});
```

This single test catches 80% of catastrophic failures in under 5 minutes. Alternatively, you can simply write a prompt in desplega.ai to automatically generate and run all your smoke tests.

Step 3: Strategic Coverage, Not Comprehensive Coverage

Aim for 70% unit tests (fast, cheap), 20% integration tests (boundary validation), 10% end-to-end tests (critical journeys) [30].

What NOT to test:

- Generated boilerplate code

- Third-party library internals

- Simple data transformations

- Stable legacy code untouched in this release

- Low-risk UI styling tweaks

Coverage beyond 80% typically shows diminishing returns [30]. Quality of tests matters far more than quantity—five well-designed tests catching critical failures deliver more value than fifty tests validating trivial functionality.

As we explored in Test Wars Episode VII: Test Coverage Rebels, the rebellion is against meaningless metrics, not against testing itself.

Step 4: AI-Assisted Testing Tools

The testing landscape has transformed for vibecoders:

Natural Language Test Creation:

- testRigor: Write tests in plain English: “Book flight from SF to NYC in business class, prefer aisle”

- LambdaTest's KaneAI: LLM-powered cross-browser testing with cloud execution

Self-Healing Test Automation:

- mabl: Agentic AI workflows with autonomous test agents, $240K+ savings vs Selenium

- Applitools: Visual AI testing, $1M+ annual savings from reduced maintenance

- BlinqIO: Generative AI + Cucumber, RedHat reports 10x test creation efficiency

Key capability: Self-healing tests that adapt automatically when UI elements change, reducing maintenance by 90%+.

Step 5: Production Monitoring as Testing

Since vibecoders don't fully understand their codebase, observability provides the feedback loop that code comprehension traditionally offered.

Monitor the “golden signals”:

- Latency: Response time, especially p99

- Traffic: Request rate and patterns

- Errors: Error rate and count by type

- Saturation: Resource utilization
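As a rough illustration of what these signals look like in code, the sketch below derives three of them from a window of request samples. The `RequestSample` shape and the function names are assumptions made for this example. Saturation is left out because it comes from host or container metrics rather than request logs, and a monitoring tool would normally compute all of this for you.

```typescript
// Hypothetical sample shape; real data would come from access logs or tracing.
interface RequestSample {
  durationMs: number;
  statusCode: number;
}

interface GoldenSignals {
  p99LatencyMs: number;
  requestsPerSecond: number;
  errorRate: number; // fraction of requests that returned a 5xx status
}

// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values: number[], p: number): number {
  if (values.length === 0) return 0;
  const sorted = values.slice().sort((a, b) => a - b);
  // Multiply before dividing to keep the rank arithmetic on integers.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

function summarize(samples: RequestSample[], windowSeconds: number): GoldenSignals {
  const errors = samples.filter((s) => s.statusCode >= 500).length;
  return {
    p99LatencyMs: percentile(samples.map((s) => s.durationMs), 99),
    requestsPerSecond: samples.length / windowSeconds,
    errorRate: samples.length === 0 ? 0 : errors / samples.length,
  };
}
```

Tracking p99 rather than the average matters because a handful of very slow requests can hide behind a healthy-looking mean.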

New Relic research shows 90% of IT leaders consider observability mission-critical, delivering 27% faster development speed, 23% better user experiences, and 21% faster time-to-market.

Tool recommendations:

- Free tier: Sentry (5,000 errors/month) + Prometheus + Grafana

- Managed: New Relic (100GB/month free), Datadog (~$15/host/month)

Step 6: Feature Flags for Progressive Rollout

LaunchDarkly's core benefit: decoupling deployment from release [33].

The workflow:

- Deploy AI-generated code to production behind flags

- Enable for 1% internal users → monitor 24 hours

- Expand to 5% early adopters → monitor 24 hours

- If metrics green, expand to 25% → monitor

- If still green, proceed to full rollout

Feature flags acknowledge that vibecoded features often contain subtle bugs that only manifest with real user data and traffic patterns. Progressive delivery reduces blast radius from affecting 100% of users for hours to affecting 1% for minutes [33].
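Percentage rollouts like the one above depend on assigning each user to a stable bucket, so the same user stays in or out of the cohort between requests and raising the percentage only ever adds users. The following is a minimal sketch of that idea, not LaunchDarkly's implementation or API. `hashString`, `bucketFor`, and `isEnabled` are hypothetical helpers, and the hash is a simple non-cryptographic one chosen for brevity.

```typescript
// Multiply-by-31 rolling hash, kept as an unsigned 32-bit integer.
function hashString(input: string): number {
  let hash = 0;
  for (let i = 0; i < input.length; i++) {
    hash = (hash * 31 + input.charCodeAt(i)) >>> 0;
  }
  return hash;
}

// Bucket 0..99, stable for a given flag and user.
// Including the flag key keeps cohorts independent across flags.
function bucketFor(flagKey: string, userId: string): number {
  return hashString(flagKey + ":" + userId) % 100;
}

// A user is in the rollout when their bucket falls below the current percentage.
function isEnabled(flagKey: string, userId: string, rolloutPercent: number): boolean {
  return bucketFor(flagKey, userId) < rolloutPercent;
}
```

Because the bucket never changes, a user who saw the feature at 5% still sees it at 25%, and setting the percentage to 0 turns it off for everyone without a redeploy.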

Step 7: Canary Deployments

Release new versions to 5-10% of users before full rollout [34]:

- Route small traffic percentage to canary servers

- Compare metrics versus baseline: error rates, latency, resource consumption

- If acceptable → expand rollout gradually

- If degraded → trigger automatic rollback

Facebook implements this with sticky canaries where 1% of users receive new code on specific servers, enabling automated revert on failures [35]. Twitter uses canary releases for service rewrites with tap-compare against production traffic [35].
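The compare-and-decide step can be expressed as a small pure function. This is a sketch under assumed metric names and thresholds: `Metrics`, `Limits`, and `evaluateCanary` are all illustrative, and sensible limits depend on how noisy your own baseline is.

```typescript
interface Metrics {
  errorRate: number; // fraction of failed requests, 0..1
  p99LatencyMs: number;
  cpuUtilization: number; // 0..1
}

interface Limits {
  maxErrorRateIncrease: number; // absolute increase allowed over baseline
  maxLatencyRatio: number; // canary p99 divided by baseline p99
  maxCpuRatio: number; // canary CPU divided by baseline CPU
}

interface CanaryDecision {
  verdict: "promote" | "rollback";
  reasons: string[];
}

// Compare canary against baseline and collect every metric that degraded.
function evaluateCanary(baseline: Metrics, canary: Metrics, limits: Limits): CanaryDecision {
  const reasons: string[] = [];
  if (canary.errorRate - baseline.errorRate > limits.maxErrorRateIncrease) {
    reasons.push("error rate");
  }
  if (canary.p99LatencyMs > baseline.p99LatencyMs * limits.maxLatencyRatio) {
    reasons.push("latency");
  }
  if (canary.cpuUtilization > baseline.cpuUtilization * limits.maxCpuRatio) {
    reasons.push("resource consumption");
  }
  return { verdict: reasons.length === 0 ? "promote" : "rollback", reasons };
}
```

Returning the reasons alongside the verdict gives whoever investigates a rollback a starting point, which matters when nobody on the team wrote the code by hand.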

Step 8: The Business-Flow Validation Layer

This is where desplega.ai's approach fundamentally differs from traditional testing tools.

As we explained in Foundation Book V: The Handoff Zone, the handoff between backend and frontend is where business logic goes to die. Traditional testing validates technical correctness (“does this API return 200?”) but misses business correctness (“did the payment actually process correctly?”).

The Business-Use framework (open-source at github.com/desplega-ai/business-use) lets you instrument critical business flows:

```typescript
import { initialize, ensure } from '@desplega.ai/business-use';

// Initialize once
initialize({ apiKey: 'your-api-key' });

// Track critical business event
ensure({
  id: 'payment_processed',
  flow: 'checkout',
  runId: 'order_123',
  data: { amount: 100, currency: 'USD' },
  depIds: ['cart_created']
});

// Validate business logic with assertion
ensure({
  id: 'order_total_valid',
  flow: 'checkout',
  runId: 'order_123',
  data: { total: 150 },
  depIds: ['item_added'],
  validator: (data, ctx) => {
    // Access upstream events
    const items = ctx.deps.filter(d => d.id === 'item_added');
    const total = items.reduce((sum, item) => sum + item.data.price, 0);
    return data.total === total;
  },
  conditions: [{ timeout_ms: 5000 }]
});
```

This catches the Lovable inadvertence from Chapter IV where 170 apps leaked data due to inverted boolean checks. It catches the authentication amnesia from Chapter VI where deactivated users retained admin access.

Traditional monitoring tells you what happened. Business-Use tells you if it happened correctly.

The Complete Lightweight Process

AI generates code → Run smoke tests automatically in CI/CD → Deploy behind feature flag → Execute integration tests → Validate business flows with Business-Use → Canary release to 5% → Monitor golden signals → If green after 24 hours, expand to 25% → If still green, full rollout → Maintain monitoring

This process takes minutes to hours while providing multiple checkpoints where issues surface before affecting all users.
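The sequence above behaves like a chain of gates where the first failure stops the rollout. Here is a minimal sketch of that control flow, assuming each checkpoint can be wrapped as a function that reports pass or fail. The `Gate` shape and `runGates` are hypothetical, and real gates would usually be asynchronous calls into CI, the flag service, or the monitoring system.

```typescript
interface Gate {
  name: string;
  // Returns true when the checkpoint is green.
  run: () => boolean;
}

interface PipelineResult {
  passed: string[];
  // Name of the first gate that failed, or null when every gate passed.
  failedAt: string | null;
}

// Run gates in order and stop at the first failure so later stages never start.
function runGates(gates: Gate[]): PipelineResult {
  const passed: string[] = [];
  for (const gate of gates) {
    if (!gate.run()) {
      return { passed, failedAt: gate.name };
    }
    passed.push(gate.name);
  }
  return { passed, failedAt: null };
}
```

The important property is the early return: a failed integration test means the canary stage is never reached, so the failure costs a pipeline run instead of a user-facing incident.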

The desplega.ai Solution: E2E Testing for the Vibecoding Era

Traditional testing tools assume humans understand the code. desplega.ai assumes you don't—and builds around that reality.

Low/No-Code Test Generation

Generate E2E tests without relying on a working version. Describe what you want tested in natural language and let AI create the test automation. This aligns perfectly with vibecoding's prompt-driven development philosophy.

Check out our QA-Use MCP server that integrates directly with Claude, Cursor, and other AI coding assistants. You can generate comprehensive test suites through the same conversational interface you use to generate code.

Self-Healing Tests

Tests that are agnostic to visuals and adapt to your changing application. When AI refactors your UI (as we saw with v0 in Chapter V: The Pixel Perturbation), desplega.ai's tests automatically adjust without breaking.

This addresses the maintenance nightmare that kills traditional test automation when code changes daily.

Business-Flow Validation

As detailed in Foundation Book V, desplega.ai tracks business flows through your entire stack—backend, frontend, third-party services—and validates that critical paths execute correctly.

Example: When Replit's AI deleted the production database (Chapter III), business-flow validation would have caught the unauthorized DELETE operation before it executed.

Continuous, Scalable Runs

Multiple test runs ensuring your application stays bug-free as you ship. Designed for teams deploying multiple times daily with AI assistance.

The Integration Layer

desplega.ai works with your existing CI/CD:

- GitHub Actions / GitLab CI integration

- Playwright-based execution (the industry standard)

- MCP protocol for AI coding assistant integration

- RESTful API for custom workflows

As we demonstrated in Chapter III: The Replit Regression, you can bridge the gap between Replit's rapid development and reliable testing by connecting desplega.ai to your deployment pipeline.

Vibecoding Testing vs Traditional QA: The Comparison

| Dimension | TDD/BDD | Traditional QA | Vibecoding | Hybrid + desplega.ai |

|---|---|---|---|---|

| Philosophy | Test-first design | Comprehensive validation | Execution-only | Risk-based strategic |

| Coverage | 80-100% | 60-80% | 10-30% | 40-60% focused |

| Speed | Slow | Medium | Very Fast | Fast |

| Maintenance | High | High | Minimal | Self-healing (low) |

| Best For | Mission-critical | Enterprise SaaS | Prototypes, MVPs | Modern product companies |

| Worst For | Rapid prototyping | Fast-moving startups | Payment/security systems | Extremely simple projects |

| AI Integration | Difficult | Difficult | Native | Native |

When Each Approach Makes Sense

TDD/BDD: Payment processing, healthcare, security-critical systems, complex business logic, regulated environments. Organizations: Banks, healthcare providers, infrastructure companies.

Traditional QA: Enterprise SaaS, embedded systems, extensive compatibility requirements, teams with dedicated QA expertise. Organizations: Established software companies with predictable release cycles.

Vibecoding + Lightweight Testing: Personal projects, early MVPs (first 100 users), internal tools, prototypes, content sites, features behind flags. Organizations: Solo founders, prototype teams.

Hybrid + desplega.ai: Modern SaaS companies shipping continuously, startups past product-market fit, platform businesses with API guarantees, companies with a mix of critical and non-critical features. Organizations: Fast-growing tech companies like Stripe, Vercel, Shopify.

As documented in Test Wars Episode IX: Code Wars, no-code platforms combined with strategic testing offer an escape from vibecoding chaos while maintaining velocity.

FAQs: Testing in the Vibecoding Era

Can you ship quality code without tests?

Short answer: No—not sustainably.

While individual features might function correctly, quality at scale requires systematic validation. Stripe's engineering principle: “Every great product requires both polish and pace—they're not opposites”.

The GitLab database deletion incident (300GB lost, 6 hours of production data permanently gone) demonstrates catastrophic consequences of skipping tests and backup validation [46]. Multiple backup mechanisms had failed silently because no one had tested recovery procedures.

IBM's research found that products with the lowest defect rates have the shortest delivery schedules [17]. The mechanism is clear: under the Rule of 100, bugs found in production cost 100-1000x more to fix than bugs caught during design or coding [9].

What's the minimum viable testing strategy?

Three foundational elements:

- Smoke tests (under 5 minutes, verifying app starts, critical paths work, API contracts intact) [28][29]

- Monitoring (golden signals: latency, traffic, errors, saturation)

- Rollback capability (undo deployments in under 5 minutes, ideally via feature flags) [33]

Beyond this foundation, scale testing with business impact. Critical systems demand integration and E2E testing [27]. High-traffic features benefit from load testing and canary analysis [34]. User-facing experiences need cross-browser and visual validation.

For vibecoding teams, add Business-Use instrumentation on critical flows to validate business logic correctness beyond technical correctness.

How do you prevent regressions when vibecoding?

Traditional regression prevention assumes humans comprehend code. Vibecoding demands different techniques:

- Self-healing test automation (mabl, Applitools, Testim) using ML to adapt tests

- Contract testing protecting integration boundaries (Pact, Spring Cloud Contract)

- Production monitoring as comprehensive regression testing with real traffic

- Canary deployments with automated metrics comparison and rollback [34]

- Feature flags enabling progressive rollout with instant revert [33]
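To illustrate the contract-testing item, here is a hand-rolled consumer-side check that needs no framework. It is not how Pact or Spring Cloud Contract work internally. `Contract` and `contractViolations` are names invented for this sketch, and it only checks top-level primitive field types.

```typescript
type FieldType = "string" | "number" | "boolean";

// The fields a consumer relies on, mapped to the type it expects.
type Contract = Record<string, FieldType>;

// Returns one message per field that is missing or has the wrong type.
// Extra fields in the payload are allowed, since consumers ignore them.
function contractViolations(contract: Contract, payload: Record<string, unknown>): string[] {
  const violations: string[] = [];
  for (const field of Object.keys(contract)) {
    const actual = typeof payload[field];
    if (actual !== contract[field]) {
      violations.push(`${field}: expected ${contract[field]}, got ${actual}`);
    }
  }
  return violations;
}

// Example contract for a hypothetical order endpoint.
const orderContract: Contract = { id: "string", total: "number", paid: "boolean" };
```

Running a check like this against a staging response in CI flags the case where regenerated code silently turns `total` into a string or drops `paid` altogether.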

The Business-Use framework targets vibecoding specifically: it validates that business flows still work correctly even when the implementation changes underneath.

The hard truth: vibecoding without regression prevention is Russian roulette with customer trust. Neither the developer nor the AI model maintains a comprehensive mental model of system behavior. Organizations must compensate with better automation, monitoring, and rollback mechanisms.

What tools do I need to get started?

Essential toolkit costs $0-500/month for small teams:

CI/CD & Testing:

- GitHub Actions / GitLab CI (free for public, $4/seat/month private)

- Playwright or Cypress (free open-source)

AI-Assisted Testing:

- testRigor (free tier, ~$100/month scale)

- Katalon free edition (<5 people)

Monitoring:

- Sentry free tier (5,000 errors/month)

- Prometheus + Grafana (free, self-hosted) OR New Relic free tier (100GB/month)

Feature Flags:

- Unleash (free, self-hosted) OR LaunchDarkly (10K events/month free) [33]

Visual Testing:

- Percy (free for open source, $299/month commercial)

- Applitools ($49/month)

Business-Flow Validation:

- desplega.ai with Business-Use framework (open-source, MIT license)

- QA-Use MCP server for AI assistant integration

Total: $100-500/month represents 0.2-1% of total engineering costs—trivial insurance against incidents costing $14,056/minute [10].

Does AI make testing obsolete?

No—AI shifts testing from implementation detail to intent validation, making it MORE critical.

Kent Beck argues TDD becomes a “superpower” when working with AI agents precisely because AI introduces regressions humans don't anticipate [38]. AI optimizes for passing the immediate prompt without maintaining architectural coherence or considering edge cases.

Natural language test creation (testRigor, LambdaTest) means non-programmers can specify test cases. Generative AI creates test data, generates test scripts, identifies coverage gaps. This democratizes testing while potentially increasing coverage.

The critical distinction: AI generates code, but humans must validate intent. Simon Willison's vibecoding definition (accepting AI output without review) marks the boundary between using AI as an assistant and abdicating responsibility [4].

The Replit database deletion and Base44 authentication bypass show AI following instructions literally while missing contextual intent [12]. Humans must specify what constitutes success—business logic, user expectations, error handling, edge cases require human judgment.

How do I convince stakeholders to invest in testing?

Frame testing as revenue protection and velocity enabler:

Cost avoidance math: “A single 1-hour incident costs us $843,360 at $14,056/minute [10]. Our $5,000/month testing investment pays for itself if it prevents just one 5-minute incident per month.”

Velocity data: Top-quartile companies achieve 4-5x faster revenue growth [16]. “Investing in testing means we ship MORE, not less, because we spend less time fixing production issues.”

Competitor examples: Stripe maintains 99.999% uptime while shipping multiple times daily. “Do we want to compete against companies achieving both speed and quality?”

Developer productivity: “We spend $1.5M annually on reactive bug fixes (20% of 50 developers at $150K) [15]. Investing $500K in testing could cut this by 50%, recovering $750K and netting $250K yearly while improving morale.”

ROI studies: 205% ROI over three years (Forrester) [19], $240K+ savings vs Selenium (mabl), $1M+ annual savings from visual testing (Applitools).

Customer impact: 68% abandon after 2 bugs, 81% lose trust after failures [13]. “How many customers can we afford to lose before testing becomes cheaper than churn?”

Disaster cases: Knight Capital's $440M loss in 45 minutes [14], GitLab's 18-hour outage [46], Cloudflare global outage [51].

For startups: “Modern smoke tests run in 5 minutes, catch 80% of critical issues, cost ~$100/month. That's 0.1% of runway for massive downside protection. Let's pilot on our three highest-risk features and measure time spent firefighting before and after.”
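The cost-avoidance and developer-productivity figures quoted above are simple enough to check in code before you put them on a slide. The sketch below reproduces them using only the numbers from this section; the function names are illustrative.

```typescript
// Average enterprise downtime cost per minute, as cited in [10].
const DOWNTIME_COST_PER_MINUTE = 14056;

// Cost of an incident lasting the given number of minutes.
function incidentCost(minutes: number): number {
  return minutes * DOWNTIME_COST_PER_MINUTE;
}

// Minutes of downtime a monthly testing budget must prevent to pay for itself.
function breakEvenMinutes(monthlyBudget: number): number {
  return monthlyBudget / DOWNTIME_COST_PER_MINUTE;
}

// Net yearly saving after paying for the testing investment.
function netAnnualSaving(
  reactiveSpend: number,
  reductionFraction: number,
  testingInvestment: number
): number {
  return reactiveSpend * reductionFraction - testingInvestment;
}

const oneHourIncident = incidentCost(60); // 843360
const fiveMinuteIncident = incidentCost(5); // 70280
const reactiveSpend = 50 * 150000 * 0.2; // 20% of 50 developers at $150K
const netSaving = netAnnualSaving(reactiveSpend, 0.5, 500000); // 250000
```

At that downtime rate, a $5,000 monthly budget breaks even after preventing roughly 21 seconds of downtime, which is why a single avoided 5-minute incident covers it many times over.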

Conclusion: The Vibecoding Testing Imperative

The vibecoding movement represents software development's inflection point: the moment when code generation speed exceeds most teams' ability to validate what they're shipping. This creates extraordinary opportunity and existential risk at the same time.

The data demolishes false choices. McKinsey proves top-quartile companies achieve 4-5x faster revenue growth precisely because they invest in quality practices [16]. IBM's 1970s findings remain validated: lowest defect rates correlate with shortest schedules [17]. CodeScene confirms excellent code health enables 33% faster iteration while poor quality creates 10x slowdowns [18].

The Framework for 2025

Classify all code by business criticality. Payments, authentication, data operations demand comprehensive testing with integration suites, staged rollouts, production monitoring [27]. Internal tools, experimental features, non-critical UIs proceed with smoke tests and quick rollback [27].

The investment is modest but non-negotiable:

- $100-500 monthly in tooling

- 5-10% of engineering time for test maintenance

- Infrastructure for smoke tests and monitoring

- Cultural commitment to quick rollbacks

- Business-flow instrumentation with desplega.ai

The tooling spend represents 0.2-1% of total engineering costs, which is trivial insurance against $14,056/minute incidents [10] and customer churn costing far more.

The Competitive Implications

Your competitors use AI to ship faster. If you match their speed without quality practices, you'll lose to production incidents, customer churn, and developer burnout. If you maintain traditional quality practices without AI acceleration, you'll lose to time-to-market.

The only winning strategy: AI velocity + modern testing practices.

As we've documented throughout the Vibe Break series—from Replit's deployment black holes to Lovable's data leaks to v0's pixel perturbations to Base44's authentication amnesia—every vibecoding platform faces the same challenge.

The solution isn't abandoning AI tools. It's building testing infrastructure that matches AI's speed.

The Path Forward

This week: Audit your three highest-risk systems.

This month: Deploy smoke tests and monitoring for them.

This quarter: Adopt desplega.ai for AI-native testing.

Ongoing: Track time-to-detect and time-to-resolve for incidents.

The vibecoding era doesn't mean testing matters less—it means testing must evolve to match new development speeds. Companies embracing lightweight, strategic, AI-augmented testing will ship faster, fail less, and grow more than anyone thought possible.

Those treating testing as optional will provide the next generation of $440 million postmortems [14].

The question isn't whether to test vibecoded software. It's whether you'll engineer quality into your speed or learn its importance through production disasters.

How desplega.ai Helps

We built desplega.ai specifically for teams navigating the vibecoding paradox. Our platform provides:

- ✅ E2E test generation from natural language descriptions

- ✅ Self-healing tests that adapt to AI-refactored code

- ✅ Business-flow validation catching logic errors functional tests miss

- ✅ MCP integration with Claude, Cursor, and AI coding assistants

- ✅ Continuous test execution for teams deploying multiple times daily

References

- Andrej Karpathy on Vibe Coding - Twitter twitter.com

- Vibe coding - Wikipedia wikipedia.org

- Who and how is driving the vibe coding revolution - Vestbee vestbee.com

- Vibe coding - Simon Willison simonwillison.net

- Cowboy coding - Wikipedia wikipedia.org

- What Is Vibe Coding? - IBM ibm.com

- Move Fast and Break Things - Snopes snopes.com

- Why Ship Fast for Solopreneurs - ShipFast shipfast.dev

- How Much Do Software Bugs Cost? 2025 Report - CloudQA cloudqa.io

- IT Outage Costs 2024 - BigPanda bigpanda.io

- Software Incidents: What They Really Cost You - Spike spike.sh

- Vibe Coding Is Not the Same as AI Agents - Addyo substack.com

- Software Errors: Real-World Business Disasters - Aspire Systems aspiresys.com

- Knight Capital: A Financial Disaster - SpecBranch specbranch.com

- Cost of Software Errors - Raygun raygun.com

- Developer Velocity - McKinsey mckinsey.com

- Software Quality at Top Speed - Steve McConnell stevemcconnell.com

- Code Quality: Speed vs Quality Myth - CodeScene codescene.com

- Mainframe Automated Testing ROI - BMC bmc.com

- Forrester TEI of Continuous Quality - Micro Focus microfocus.com

- ROI of Test Automation - IT Convergence itconvergence.com

- Optimizing IT Productivity - McKinsey mckinsey.com

- 7 AI Test Automation Tools - TestGuild testguild.com

- Technical Debt - Gartner gartner.com

- Technical Debt Whitepaper - TinyMCE tiny.cloud

- The Startup Dilemma - Pragmatic Engineer pragmaticengineer.com

- 4 Ways to Ship Smarter - LeadDev leaddev.com

- Smoke Testing - Wikipedia wikipedia.org

- Critical Mass of Tests - CloudBees cloudbees.com

- Key Considerations: Test Coverage - Centercode centercode.com

- Testing in Production - LaunchDarkly launchdarkly.com

- Testing in Production: Tools - Lightrun lightrun.com

- Testing in Production: The Safe Way - CopyConstruct medium.com

- AWS S3 Outage 2017 - AWS aws.amazon.com

- Self Testing Code - Martin Fowler martinfowler.com

- TDD, AI Agents and Coding with Kent Beck - Pragmatic Engineer pragmaticengineer.com

- Is TDD Dead? - Martin Fowler martinfowler.com

- Test-induced Design Damage - DHH dhh.dk

- Building a Culture of System Reliability - Stripe stripe.com

- 13 Sentences About Startups - Paul Graham paulgraham.com
